This project is the culmination of our work in the "ECE484: Principles of Safe Autonomy" course at UIUC, undertaken by a team of four members. The primary objective is to design a purely vision-based autonomous navigation system capable of real-time lane following while ensuring a smooth and reliable driving experience across various real-world scenarios. The system is specifically designed to handle three critical situations: stop sign detection, pedestrian detection, and navigating through road work zones.

Introduction

Imagine sitting in a vehicle that takes you anywhere without the need for manual operation. Autonomous vehicles have the potential to turn this vision into reality, and a key component of such systems is a robust navigation system capable of reliable lane following.

In this project, we focus on developing a purely vision-based navigation system, aiming to eliminate dependence on costly sensors such as LiDAR and thereby make autonomous technology more accessible and scalable. Our system is designed to perform real-time lane following while handling critical scenarios such as stop sign detection, pedestrian avoidance, and navigation through road work zones, demonstrating its potential to improve safety and adaptability across diverse driving environments.

Approaches

Hardware Architecture

The whole system is built on ROS Noetic. All nodes run on a companion computer mounted on the vehicle.

> GEMe2 Vehicle

Figure 1 shows the hardware architecture of the GEMe2 vehicle:

Figure 1. Hardware architecture

The AStuff Spectra2 computer (CPU: Intel Xeon E-2278G, 3.40 GHz x16; GPUs: two NVIDIA RTX A4000) is the vehicle's companion computer and processes the signals from the sensors.

The vehicle is equipped with multiple sensors. The one used in this project is the ZED2 stereo camera, mounted at the front of the vehicle. It delivers a high-resolution 1080p video stream that serves as the primary source of information for the system’s vision-based navigation and environment analysis.

For more information about GEMe2, please visit:

https://publish.illinois.edu/robotics-autonomy-resources/gem-e2/

Software Architecture

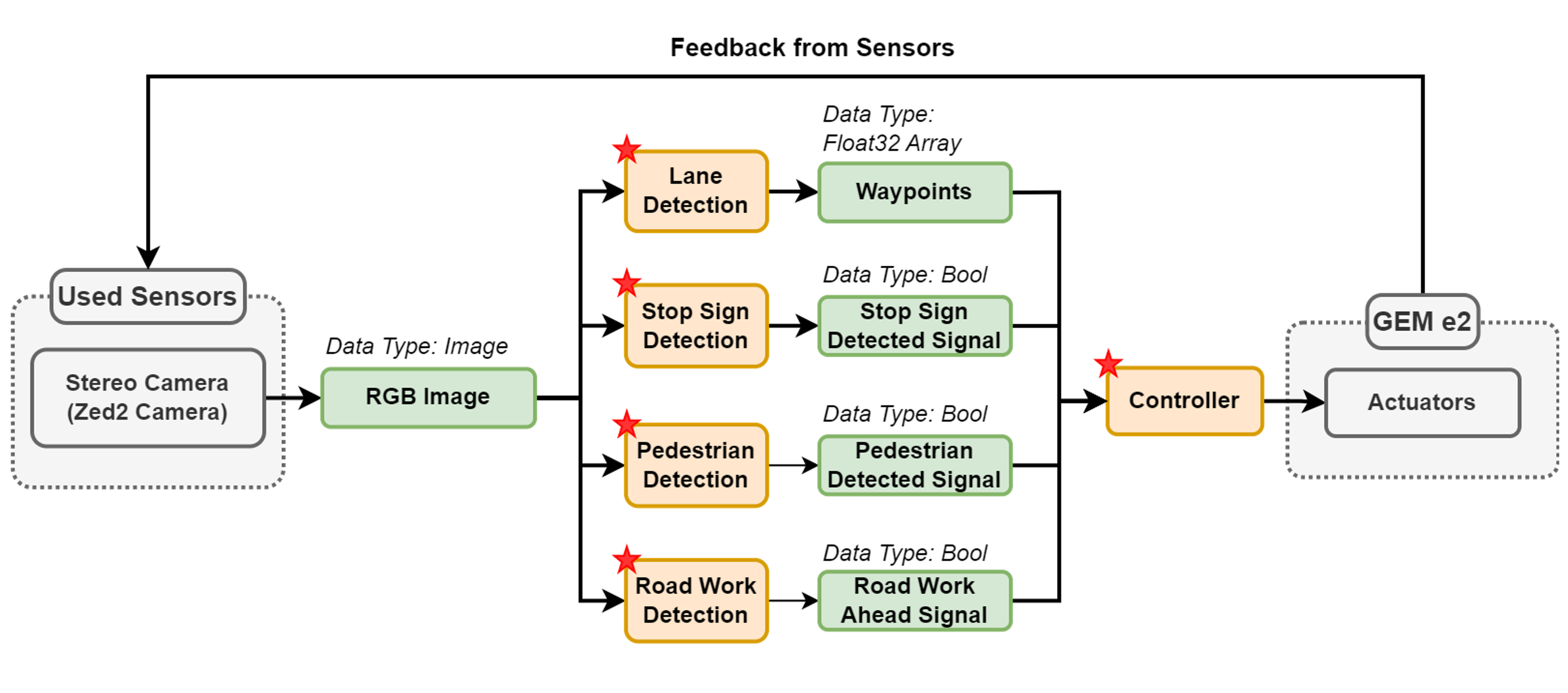

Figure 2. Software architecture of the system.

Figure 2 illustrates the overall software architecture of the system. As mentioned previously, the whole project is based on pure vision. Thus, the ZED2 camera is the only sensor we are using. There are three core components in the system: lane detection, vehicle control, and event handling in real-world scenarios.

> 1.Lane Detection

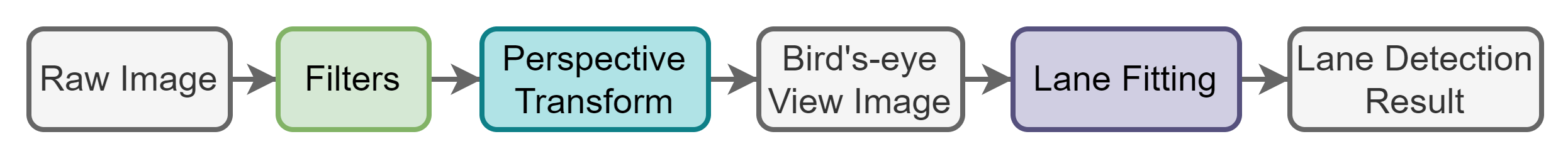

The overall workflow for lane detection is depicted in Figure 3.

Figure 3. Workflow of lane detection.

The process begins with capturing raw RGB images from the camera, which are then preprocessed using various filters to remove irrelevant details and noise. Next, the filtered image is transformed into a bird's-eye view using perspective transformation, providing a clear and top-down perspective of the road. Finally, lane information is extracted through a lane-fitting algorithm, enabling precise detection and mapping of the lanes.

-> Filters

For the filtering process, we combine three methods to identify the final lane area: gradient filtering, color filtering, and YOLOPv2. The gradient filter applies Gaussian blur to reduce noise, runs Canny edge detection, and then applies morphological dilation to improve lane thickness and continuity. For color filtering, we use the HLS color space to isolate white lane markings and apply Contrast Limited Adaptive Histogram Equalization (CLAHE) to compensate for uneven lighting and improve visibility. YOLOPv2, a multi-task deep learning network derived from YOLOP, perceives lanes and other road features, but its high sensitivity can cause it to detect extraneous areas. To counter this, we integrate the output of YOLOPv2 with the results of the gradient and color filters to obtain a refined final lane mask.

Figure 4. Results of the filters.

[Note] Top-left: YOLOPv2 / Top-right: Color filter / Bottom-left: Final output / Bottom-right: Gradient filter.

-> Perspective Transform

In this step, the image is transformed into a bird's-eye view, providing a top-down perspective of the road. This process involves identifying the locations of four key points in the original image, which define the region of interest, and mapping them to corresponding points in the bird's-eye view. These four points are adjusted to form the corners of the transformed image, ensuring an accurate geometric representation of the lanes on a 2D plane.

-> Lane Fitting Function

The previous step yields a binary bird’s-eye view image that distinguishes lane pixels from the background. The image is then divided into several horizontal layers, and the lane centers are identified in each layer using a histogram-based method. For each layer, we compute the histogram of lane pixels in the left and right halves of the image, and the area with the highest pixel density in each half is taken as the center of the corresponding lane. Finally, the coordinates of these lane centers are fitted to a second-order polynomial, giving a smooth representation of the lane boundaries.
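A minimal NumPy sketch of this layer-wise histogram search and polynomial fit is shown below. The names `layer_centers` and `fit_lane`, the number of layers, and the `min_pixels` threshold are illustrative assumptions. The polynomial is fitted as x = a·y² + b·y + c, since lanes are close to vertical in the bird's-eye view.

```python
import numpy as np


def layer_centers(binary, n_layers=8, min_pixels=5):
    """Return per-layer lane centers as (x, y) lists for the left and right lane."""
    h, w = binary.shape
    mid = w // 2
    layer_h = h // n_layers
    left, right = [], []
    for i in range(n_layers):
        y0, y1 = i * layer_h, (i + 1) * layer_h
        hist = binary[y0:y1].sum(axis=0)          # lane pixels per column
        y_center = (y0 + y1 - 1) / 2.0
        for points, lo, hi in ((left, 0, mid), (right, mid, w)):
            half = hist[lo:hi]
            if half.sum() >= min_pixels:          # skip layers with no lane evidence
                points.append((lo + int(np.argmax(half)), y_center))
    return left, right


def fit_lane(points):
    """Fit x = a*y^2 + b*y + c through the lane centers of one lane."""
    xs, ys = zip(*points)
    return np.polyfit(ys, xs, 2)
```

The fitted coefficients can be evaluated with `np.polyval(coeffs, y)` to obtain the lane's x position at any image row.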

> 2.Controller

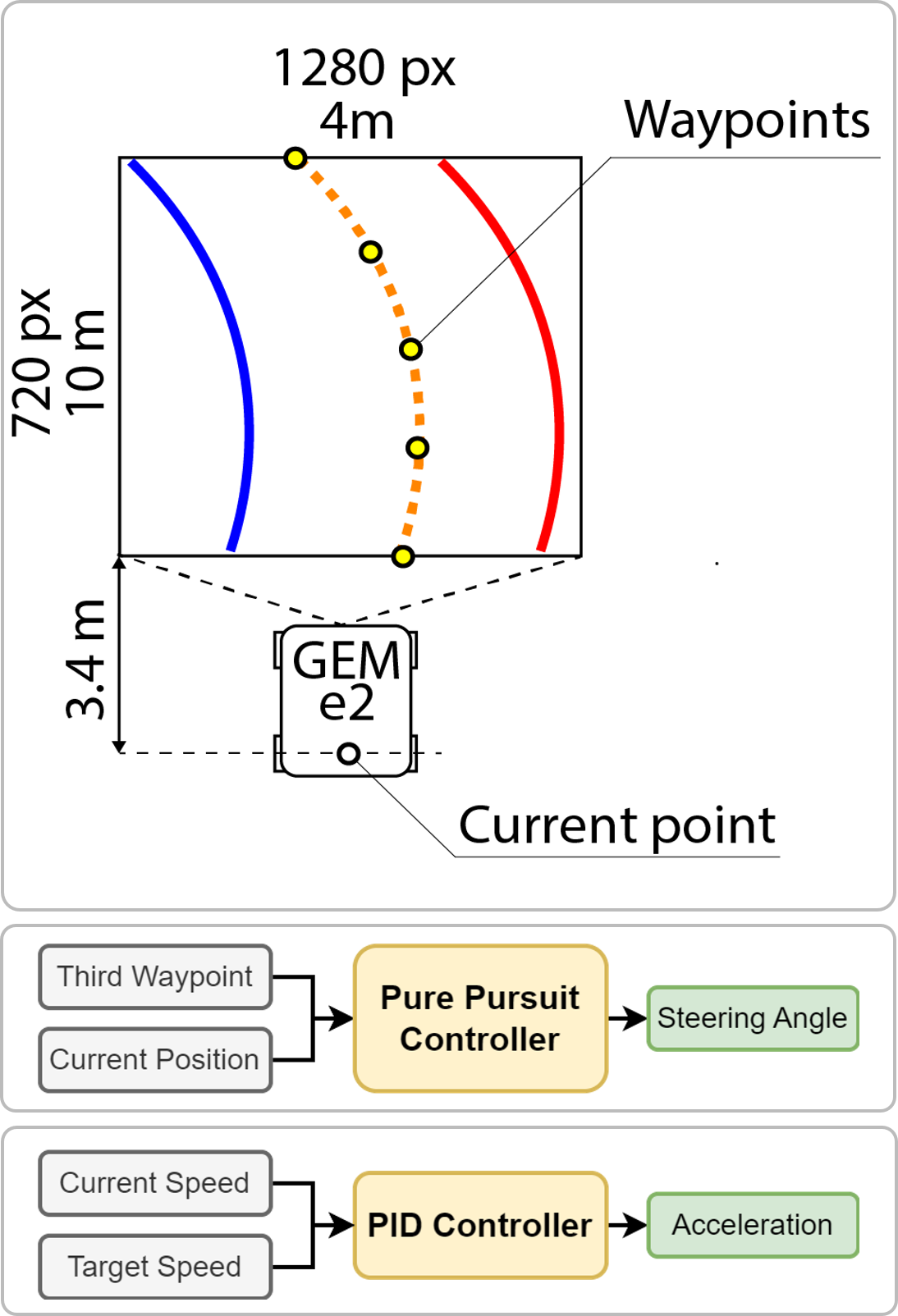

The controller section of the system comprises three key components to ensure precise lane following:

-> Waypoint Generation

The process begins with detecting the lane boundaries in the image. The lane's midline is then divided into four equal segments, generating five waypoints. The vehicle’s current position relative to these waypoints is determined using the ratio between image pixels and the corresponding real-world distance on the ground.
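One way to realize this step is sketched below. It assumes the two lane boundaries are available as second-order polynomials x = f(y) in the bird's-eye image, that the vehicle sits at the bottom center of that image, and that the midline is split evenly along the image's vertical axis. The function name and the meters-per-pixel parameters are hypothetical; the real scale factors depend on the camera calibration.

```python
import numpy as np


def midline_waypoints(left_fit, right_fit, img_h, img_w,
                      m_per_px_x, m_per_px_y, n_segments=4):
    """Return n_segments + 1 waypoints as (forward_m, lateral_m) tuples.

    forward_m: distance ahead of the vehicle; lateral_m: positive to the left.
    """
    # Rows from the bottom of the image (vehicle) to the top (far ahead).
    ys = np.linspace(img_h - 1, 0, n_segments + 1)
    mid_x = (np.polyval(left_fit, ys) + np.polyval(right_fit, ys)) / 2.0
    forward = (img_h - 1 - ys) * m_per_px_y       # pixel rows -> meters ahead
    lateral = (img_w / 2.0 - mid_x) * m_per_px_x  # pixel columns -> meters left
    return [(float(f), float(l)) for f, l in zip(forward, lateral)]
```

With four segments this yields five waypoints, the first at the vehicle's position and the last at the far end of the visible lane.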

-> Steering Control

A Pure Pursuit controller is used to calculate the steering angle needed to follow the lane accurately. This controller identifies the steering angle required to reach a designated target waypoint, positioned ahead of the vehicle, ensuring smooth and precise lane following.
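The standard Pure Pursuit steering law for a bicycle model is δ = atan(2·L·sin(α) / l_d), where L is the wheelbase, α is the angle from the vehicle's heading to the target waypoint, and l_d is the distance to that waypoint. The sketch below implements this textbook form; the function name and the default wheelbase are placeholder assumptions and should be replaced with the vehicle's measured values.

```python
import math


def pure_pursuit_steering(target_x, target_y, wheelbase=1.75):
    """Steering angle (rad) toward a target given in the vehicle frame.

    target_x: meters ahead of the rear axle; target_y: meters to the left.
    wheelbase: assumed placeholder value in meters.
    """
    lookahead = math.hypot(target_x, target_y)
    if lookahead < 1e-6:
        return 0.0                                # target coincides with the vehicle
    alpha = math.atan2(target_y, target_x)        # heading error to the target
    return math.atan2(2.0 * wheelbase * math.sin(alpha), lookahead)
```

A target straight ahead gives zero steering, and a target to the left gives a positive (left) steering angle under this sign convention.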

-> Speed Control

A PID (Proportional-Integral-Derivative) controller is implemented to manage the vehicle's speed. It adjusts the acceleration or deceleration necessary to maintain the desired speed, ensuring consistent and optimal performance. By combining these control mechanisms, the system achieves both accurate steering and precise speed regulation, enabling smooth, safe, and efficient lane following.
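A discrete PID speed controller of this kind can be sketched as follows. The class name, gains, and output limits are illustrative assumptions and not the tuned values used on the vehicle.

```python
class PID:
    """Minimal discrete PID controller with output clamping."""

    def __init__(self, kp, ki, kd, out_min=-1.0, out_max=1.0):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.out_min, self.out_max = out_min, out_max
        self.integral = 0.0
        self.prev_error = None

    def step(self, target_speed, measured_speed, dt):
        """Return an acceleration command from the current speed error."""
        error = target_speed - measured_speed
        self.integral += error * dt
        # No derivative term on the first call, when there is no previous error.
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        output = self.kp * error + self.ki * self.integral + self.kd * derivative
        return max(self.out_min, min(self.out_max, output))
```

In a control loop, `step` would be called once per cycle with the measured speed, and its output mapped to throttle or brake commands.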

> 3.Real-World Scenarios Detection

In this section, we focus on detecting and responding to critical real-world driving scenarios using an object detection model called YOLO v8. YOLO v8, developed by Ultralytics, is known for its speed, accuracy, and ease of use. It provides pre-trained models that identify objects in images or video streams quickly and efficiently.

Our system utilizes YOLO v8 to detect three key scenarios:

- [1] Stop Sign Detection: The model is trained to recognize stop signs in real time. Upon detecting a stop sign, the system triggers a braking mechanism to bring the vehicle to a complete halt.

- [2] Pedestrian Avoidance: The system can detect pedestrians in the vehicle's path. When a pedestrian is detected, the system activates an avoidance maneuver, such as steering around the pedestrian or slowing down significantly.

- [3] Road Work Zone Navigation: The system can identify warning cones or other markers indicating road work zones. Upon detection, the system adjusts the vehicle's path to safely navigate through the work zone, potentially slowing down or changing lanes as necessary.
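The mapping from detections to the three responses listed above can be sketched as a small decision function. The detector itself is left out: the sketch assumes each detection has already been reduced to a class label and a confidence score. The labels `"stop sign"` and `"person"` match COCO class names, while `"cone"` is a hypothetical label that would require a custom-trained model. The priority order, confidence threshold, and action names are also illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    label: str         # class name reported by the detector
    confidence: float  # detection score in [0, 1]


def choose_action(detections, min_conf=0.5):
    """Pick one high-level action from a frame's detections.

    Assumed priority: pedestrian > stop sign > work zone > normal lane following.
    """
    labels = {d.label for d in detections if d.confidence >= min_conf}
    if "person" in labels:
        return "avoid_pedestrian"
    if "stop sign" in labels:
        return "stop_at_sign"
    if "cone" in labels:
        return "replan_path"
    return "follow_lane"
```

For example, `choose_action([Detection("stop sign", 0.9), Detection("person", 0.8)])` returns `"avoid_pedestrian"`, because pedestrians take precedence in this assumed ordering.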

By integrating YOLO v8 into our autonomous navigation system, we improve its safety and reliability by enabling it to respond proactively to challenging real-world driving situations. This demonstrates how object detection models can make autonomous vehicles more robust.

Results

In summary, this project successfully demonstrates the ability to achieve lane following on a full track, both in Gazebo simulation and in the real world. The system is capable of navigating the lane, detecting stop signs, and identifying pedestrians, with the vehicle coming to a stop when necessary. After a brief pause, the vehicle resumes motion once no additional obstacles are detected. Additionally, the system can intelligently plan and adjust its path when encountering road work zones, ensuring safe navigation through such areas. This project showcases the effectiveness of a vision-based autonomous driving system in real-world scenarios.

> Quick Demo

> Full Presentation

Project Code

> For the whole project code, please visit: https://github.com/htliang517/AutoGem